Issue:

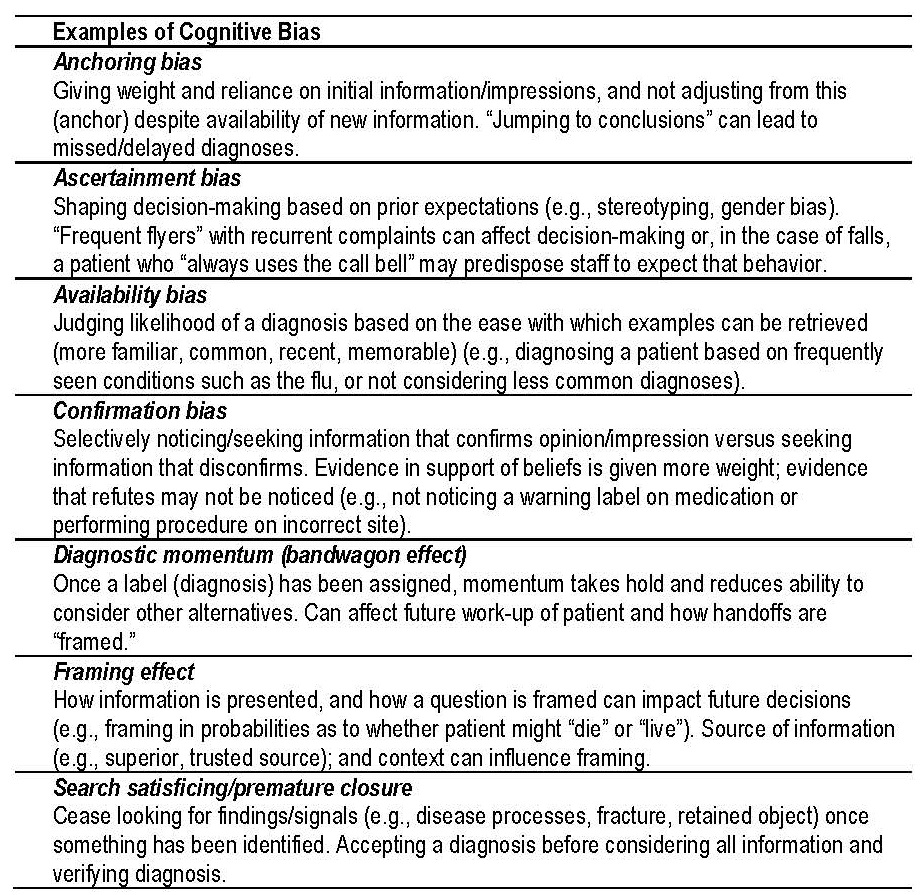

Inconsistently reported and therefore challenging to quantify, cognitive biases are increasingly recognized as contributors to patient safety events. Cognitive biases are flaws or distortions in judgment and decision-making.

Within events reported to The Joint Commission, cognitive biases have been identified as contributors to a number of sentinel events, ranging from unintended retention of foreign objects (e.g., search satisficing), wrong-site surgeries (e.g., confirmation bias), and patient falls (e.g., availability heuristic and ascertainment bias) to delays in treatment, particularly diagnostic errors that may result in a delay in treatment (e.g., anchoring, availability heuristic, framing effect and premature closure). According to the literature, diagnostic errors are associated with 6-17 percent of adverse events in hospitals, and 28 percent of diagnostic errors have been attributed to cognitive error.1

Two processes in thinking and decision-making help describe how cognitive biases manifest. The intuitive process, known as System I, is associated with unconscious, automatic, “fast” thinking, whereas the analytical process, known as System II, is deliberate, resource-intensive, “slow” thinking.2 Fast thinking responds to stimuli, recognizes patterns, creates first impressions, and is associated with intuitions. It is where heuristics (shortcuts or rules of thumb drawn from repeated experiences and learned associations) are deployed to expedite thinking while expending few, if any, attentional resources.

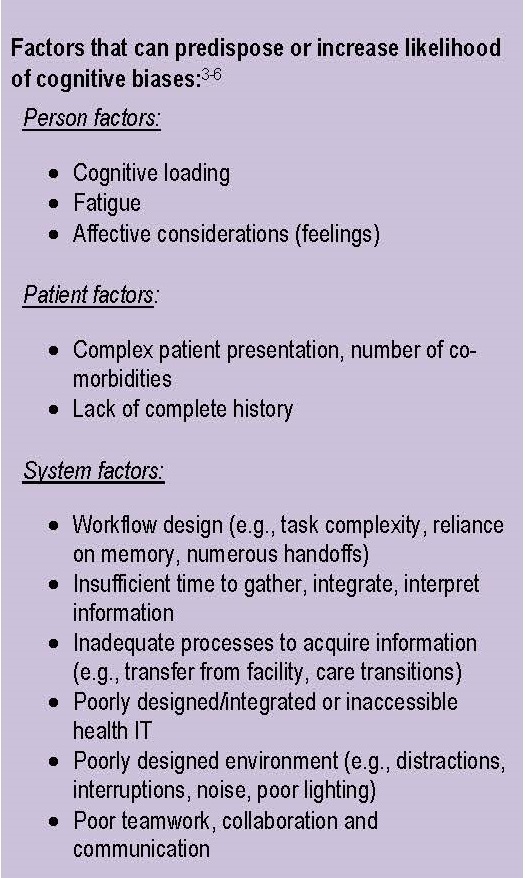

Many of life’s daily activities are performed using fast thinking, such as driving to work, recognizing facial expressions, and knowing that 2+2=4. These activities consume little effort and draw little from working memory. As such, fast thinking is often very useful, efficient and effective. However, it is imperfect and is predisposed to predictable pitfalls in judgment – cognitive biases. For instance, heuristics may be misapplied given incomplete information. They may cloud the ability to consider alternatives or see other solutions, and can lead to inaccurate estimates of how common or frequent occurrences are or how representative something is. This can, in turn, affect (or “set up”) the analytical process where reasoning and clinical decision-making occur.

It is important for health care organizations to gain knowledge around cognitive biases and provide sociotechnical work systems that recognize and compensate for limitations in cognition, as well as promote conditions that facilitate decision-making.

Example cognitive biases

A patient with co-morbidities of renal failure, diabetes, obesity and hypertension arrived at the ED via EMS. Though the patient’s chief complaint was chest pain, it was reported to triage as back pain, a secondary complaint (framing effect). The patient was “known to the organization,” having been to the ED several times previously for back pain, and had been seen earlier that day for a cortisone shot (ascertainment bias). Triage assessment focused on back pain rather than chest pain (anchoring, confirmation bias, diagnostic momentum). The primary nurse began to prepare the evaluation using information from triage indicating “back pain” (framing, diagnostic momentum) and did not independently evaluate the patient. The patient was found deceased a short time after arrival.

Safety Actions to Consider:

While mitigating the occurrence of cognitive bias can be challenging, health care organizations should consider the following strategies to help increase the awareness of cognitive biases and promote work system conditions that can detect, protect against, and recover from cognitive biases and associated risk.3-6

Enhance knowledge and awareness of cognitive biases

- Support discussion of clinical cases to expose biases and raise awareness as to how they occur (M&M meetings, reflective case reviews)

- Provide simulation and training illustrating biased thinking

Enhance professional reasoning, critical thinking and decision-making skills

- Train for and incorporate strategies for metacognition (“thinking about one’s thinking”)

- Practice reflection or a “diagnostic time-out,” which facilitates being open to and actively considering alternative explanations/diagnoses by asking the question, “How else can this be explained?”

- Train for and incorporate systematic methods for reasoning and critical thinking (Bayesian model or probabilistic reasoning, mnemonics such as “SAFER”)

- Promote a systematic method for presenting information to reduce the framing effect

- Provide simulation opportunities to increase experience and exposure

- Provide focused and immediate feedback regarding diagnostic decision-making (why it was right or wrong) to allow insight into one’s own reasoning and recalibrate where needed
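
The probabilistic (Bayesian) reasoning mentioned in the list above can be illustrated with a short worked example. The following is a minimal sketch, not a clinical tool: the function name `post_test_probability` and the numbers used (a 10 percent pre-test probability, 90 percent sensitivity, 80 percent specificity) are assumptions chosen only to make the arithmetic easy to follow. It applies Bayes' rule in likelihood-ratio form to show how an initial estimate should shift after a test result, rather than staying anchored on a first impression.

```python
def post_test_probability(pre_test: float, sensitivity: float,
                          specificity: float, positive: bool = True) -> float:
    """Update a pre-test probability with a test result using Bayes' rule.

    All inputs are probabilities strictly between 0 and 1. The values used
    in the example below are illustrative assumptions, not clinical data.
    """
    for name, value in (("pre_test", pre_test),
                        ("sensitivity", sensitivity),
                        ("specificity", specificity)):
        if not 0.0 < value < 1.0:
            raise ValueError(f"{name} must be strictly between 0 and 1")

    # Likelihood ratio: how much more likely this result is in patients
    # with the condition than in patients without it.
    if positive:
        likelihood_ratio = sensitivity / (1.0 - specificity)
    else:
        likelihood_ratio = (1.0 - sensitivity) / specificity

    # Convert probability to odds, apply the likelihood ratio, convert back.
    pre_odds = pre_test / (1.0 - pre_test)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1.0 + post_odds)


if __name__ == "__main__":
    # Hypothetical scenario: 10% pre-test probability, 90% sensitive,
    # 80% specific test.
    after_positive = post_test_probability(0.10, 0.90, 0.80, positive=True)
    after_negative = post_test_probability(0.10, 0.90, 0.80, positive=False)
    print(f"After a positive result: {after_positive:.3f}")
    print(f"After a negative result: {after_negative:.3f}")
```

Under these assumed numbers, a positive result raises the probability from 10 percent to roughly one in three, which is a substantial shift but still far from certainty. Making that update explicit is one way to counter premature closure.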

Enhance work system conditions and workflow design that affect cognition

- Promote conditions that facilitate perception/recognition/decision-making (e.g., useful information displays, adequate lighting, supportive layout, limited distractions, interruptions and noise)

- Limit cognitive loading, task saturation, fatigue

- Allocate time to review information, gather data, discuss case

- Provide access to/clarity of information (e.g., test results, referrals, H&P)

- Facilitate care transitions

- Ensure health information technology (IT) is usable, accessible and integrated within the workflow

- Facilitate real-time decision making and reduce reliance on memory (e.g., technology, clinical decision support systems, cognitive aids, algorithms)

- Promote inter- and intra-professional collaboration/teamwork to verify assumptions, interpretations, conclusions (e.g., communication/teamwork training)

- Design for error and build resilient systems that help detect and recover from error (redundancies, flagging critical lab values, triangulating data)
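
The idea of flagging critical lab values, mentioned in the last item above, could be sketched roughly as follows. This is an illustration under stated assumptions: the analyte names (`analyte_a`, `analyte_b`) and their limits are hypothetical placeholders, not clinical reference ranges, and the function `flag_critical` does not correspond to any particular health IT product. The sketch also shows one "design for error" choice: a result with no configured range is surfaced for manual review instead of passing silently.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class CriticalRange:
    low: float   # values below this are flagged
    high: float  # values above this are flagged


# HYPOTHETICAL placeholder limits for illustration only; real critical
# values would come from the organization's own laboratory policy.
CRITICAL_RANGES: Dict[str, CriticalRange] = {
    "analyte_a": CriticalRange(low=2.0, high=8.0),
    "analyte_b": CriticalRange(low=50.0, high=400.0),
}


def flag_critical(results: Dict[str, float]) -> List[str]:
    """Return a human-readable flag for each result that needs attention."""
    flags: List[str] = []
    for name, value in results.items():
        limits = CRITICAL_RANGES.get(name)
        if limits is None:
            # Unknown analyte: do not assume it is normal.
            flags.append(f"{name}: no critical range configured, needs manual review")
        elif value < limits.low:
            flags.append(f"{name}: {value} below critical low {limits.low}")
        elif value > limits.high:
            flags.append(f"{name}: {value} above critical high {limits.high}")
    return flags


if __name__ == "__main__":
    sample = {"analyte_a": 9.1, "analyte_b": 120.0, "analyte_c": 1.0}
    for flag in flag_critical(sample):
        print(flag)
```

The point of such a check is to reduce reliance on memory and vigilance: the system, rather than the clinician's recall, carries the burden of noticing an out-of-range value.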

Promote an organizational culture that supports the decision-making process

- Provide an organizational culture that supports the items listed above. Oro™ 2.0 provides tools and resources designed to guide hospital leadership throughout the high reliability journey. For more information, visit the Joint Commission Center for Transforming Healthcare website.

- Support a safe, non-punitive reporting culture to learn from near misses and incidents (how do cognitive biases arise, what strategies can be deployed to mitigate risk)

- Actively include consideration of cognitive bias in patient safety incident analysis to enhance understanding of how they contribute and can be mitigated

- Empower and encourage professionals to speak up

- Engage and empower patients and families to partner in their care, understand their diagnoses, ask questions and speak up

Resources:

- Balogh EP, Miller BT, Ball JR. Improving Diagnosis in Health Care. National Academies Press (US). 2015.

- Kahneman D. Thinking, Fast and Slow. New York: Farrar, Straus and Giroux. 2011.

- Henriksen K, Brady J. The pursuit of better diagnostic performance: a human factors perspective. BMJ Quality and Safety, 2013:22:ii1-ii5.

- Graber ML, et al. Cognitive interventions to reduce diagnostic error: a narrative review. BMJ Quality and Safety, 2012:21:535-557.

- Croskerry P, Singhal G, & Mamede S. Cognitive debiasing 1: origins of bias and theory of debiasing. BMJ Quality and Safety, 2013:0:1-7.

- Croskerry P. The importance of cognitive errors in diagnosis and strategies to minimize them. Academic Medicine, 2003:78(8).

- Tversky A & Kahneman D. Judgment under uncertainty: heuristics and biases. Science, New Series, 1974:185(4157), p. 1124-1131.

- Ogdie AR, et al. Seen through their eyes: residents’ reflections on the cognitive and contextual components of diagnostic errors in medicine. Academic Medicine, 2012:87(10): 1361-7.

- Shaw M & Singh S. Complex clinical reasoning in the critical care unit – difficulties, pitfalls and adaptive strategies. International Journal of Clinical Practice, 2015:69(4):396-400.

- Norman G. The bias in researching cognitive bias. Advances in Health Sciences Education, 2014:19:291–295.

Note: This is not an all-inclusive list.